Tutorial Series: Add Interactive Touch Objects with AS3 & GML: Method 2

Tutorial 2: Add GML-Defined Manipulations

Introduction

In this tutorial we create a simple touch object using ActionScript and attach a GML-defined multitouch gesture to it. This method uses the built-in automatic transform methods to directly control how touch objects are manipulated by gestures. This tutorial requires Adobe Flash CS5+ and Open Exhibits 2 (download the SDK).

Getting Started

The first step in creating an interactive touch object is to construct a new instance of a TouchSprite object. This is done using methods similar to creating a Sprite. For example:
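A minimal sketch of this step, assuming the TouchSprite class lives in the com.gestureworks.core package of the SDK and using the instance name “ts0” that the rest of this tutorial refers to:

```actionscript
import com.gestureworks.core.TouchSprite; // package path is an assumption

// Construct the touch object the same way a Sprite would be constructed
var ts0:TouchSprite = new TouchSprite();
```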

As with Sprites and MovieClips, images can be dynamically loaded directly into the display object.

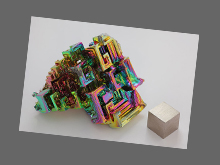

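    var Loader0:Loader = new Loader(); // assumed declaration, not shown in the original listing
    Loader0.load(new URLRequest("library/assets/crystal0.jpg")); // start loading the image
    ts0.addChild(Loader0); // display the loaded image inside the touch sprite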

TouchSprites and TouchMovieClips inherit the complete set of public properties available to Sprites and MovieClips, so their display object properties can be treated in the same way. For example, the following code positions, rotates, and scales the touch sprite “ts0”, then places it on the stage:

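    ts0.y = 100;         // vertical position on the stage
    ts0.rotation = 45;   // rotate by 45 degrees
    ts0.scaleX = 0.5;    // scale to half width
    ts0.scaleY = 0.5;    // scale to half height
    addChild(ts0);       // place the touch sprite on the stage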

Unlike Sprites and MovieClips, all TouchSprites and TouchMovieClips can detect and process multitouch gestures as defined by the root GML document. All touch objects in a GestureWorks3 application have access to the gestures defined in the root GML document located in the bin folder. However, a touch object will only respond to a specific touch gesture when that gesture is explicitly attached. To attach and activate a gesture on a touch object, you must add the gesture to the gestureList property and set it to true. This can be done using an in-line method, for example:
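A minimal sketch of the in-line form, assuming gestureList accepts a plain object whose keys are gesture ids from the GML document and that “ts0” is an existing TouchSprite:

```actionscript
// Attach the "n-drag" gesture to ts0 and switch it on
ts0.gestureList = {"n-drag": true};
```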

This adds the gesture “n-drag” to the touch object, effectively activating gesture analysis on “ts0”. Any touch point placed on the touch object is added to the local cluster. The touch object inspects touch point clusters for a matching gesture “action” and then calculates cluster motion in the x and y directions. The result is then processed and prepared for mapping.

The traditional event model in Flash employs the explicit use of event listeners and handlers to manage gesture events on a touch object. However, Gesture Markup Language can be used to directly control how gesture events map to touch object properties, and therefore how touch objects are transformed. These tools are integrated into the gesture analysis engine inside each touch object and allow custom gesture manipulations and property updates to occur on each touch object.

    <Gesture id="n-drag" type="drag">
        <match>
            <action>
                <initial>
                    <cluster point_number="0" point_number_min="1" point_number_max="5" translation_threshold="0"/>
                </initial>
            </action>
        </match>
        <analysis>
            <algorithm>
                <library module="drag"/>
                <returns>
                    <property id="drag_dx"/>
                    <property id="drag_dy"/>
                </returns>
            </algorithm>
        </analysis>
        <processing>
            <inertial_filter>
                <property ref="drag_dx" release_inertia="false" friction="0.996"/>
                <property ref="drag_dy" release_inertia="false" friction="0.996"/>
            </inertial_filter>
        </processing>
        <mapping>
            <update>
                <gesture_event>
                    <property ref="drag_dx" target="x" delta_threshold="true" delta_min="0.01" delta_max="100"/>
                    <property ref="drag_dy" target="y" delta_threshold="true" delta_min="0.01" delta_max="100"/>
                </gesture_event>
            </update>
        </mapping>
    </Gesture>

In this example, the gesture “n-drag”, as defined in the root GML document “my_gestures.gml”, directly maps the values returned from gesture processing, “drag_dx” and “drag_dy”, to the targets “x” and “y”. Internally, the delta values are added to the “$x” and “$y” properties of the touch object. This translates the object on stage to the center of the touch point cluster.

This process can be interrupted or stopped at runtime by setting the gesture to false in the gestureList. For example:
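A minimal sketch, under the same assumption that gestureList accepts a plain object keyed by gesture id:

```actionscript
// Keep "n-drag" attached to ts0 but switch it off
ts0.gestureList = {"n-drag": false};
```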

This halts the continuous “n-drag” gesture analysis and processing on the touch object without removing the gesture, so it can be re-engaged later if required.

The benefit of using GML to handle gesture events is that complex gesture-based manipulations can be added to touch objects in a few simple lines of code. The manipulations are managed internally by the TransformManager class integrated into TouchSprite and TouchMovieClip. Any transformations that occur on a touch object can be inspected, if required, by listening to GestureEvents or TransformEvents, but can otherwise be managed entirely “automatically”.
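One way to inspect the drag while GML still drives the transform is to listen for the gesture event. The sketch below rests on several assumptions: that the SDK provides a GWGestureEvent class in com.gestureworks.events with a DRAG constant, and that the event carries a value object exposing the properties named in the GML returns block. The handler name onDragInspect is hypothetical.

```actionscript
import com.gestureworks.events.GWGestureEvent; // package path is an assumption

// Observe drag events on ts0 without changing how GML maps them
ts0.addEventListener(GWGestureEvent.DRAG, onDragInspect);

// Hypothetical handler: logs the deltas named in the GML <returns> block.
// The property names on event.value are assumed to match the GML ids.
function onDragInspect(event:GWGestureEvent):void {
    trace("drag_dx:", event.value.drag_dx, "drag_dy:", event.value.drag_dy);
}
```

Because the handler only reads values, the GML mapping remains the single place where the transform is defined.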

This method mirrors the best practice of dynamically assigning object-based media assets and formatting: it provides a framework that fully externalizes object gesture descriptions and interactions. As a result, developers can efficiently refine UI/UX interactions without recompiling the application.

Note: This method (method 2) defines a work-flow that uses a combination of ActionScript public methods (available in Open Exhibits 2) to create and manage touch objects in association with GML-controlled gesture interactions. As with all methods and work-flows that use GestureWorks3, gesture actions and event types are defined by the GML document “my_gestures.gml”.