Tutorial Series: Add Interactive Touch Objects with AS3 & GML: Method 1

Tutorial 1: Simple Manual GestureEvent Control Using ActionScript 3 and GML

Introduction

In this tutorial we use ActionScript to add a simple GML-defined multitouch gesture to a touch object, then add a gestureEvent listener and handler to manage what happens when a gestureEvent is detected. This tutorial requires Adobe Flash CS5+ and Open Exhibits 2 (download the SDK).

How to add a GestureEvent Listener

First, we need to construct a new instance of a TouchSprite object. This is done in the same way as creating a Sprite. For example:
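A minimal sketch of the construction step follows. The import path `com.gestureworks.core.TouchSprite` is an assumption about where the class lives in the Open Exhibits SDK; check it against your installed version.

```actionscript
// Assumed package path for the Open Exhibits TouchSprite class.
import com.gestureworks.core.TouchSprite;

// Construct the touch object just as you would construct a Sprite.
var myTouchSprite:TouchSprite = new TouchSprite();
```

The variable name "myTouchSprite" matches the name used throughout the rest of this tutorial.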

A bitmap image can then be dynamically loaded and placed into the touch object “myTouchSprite”.

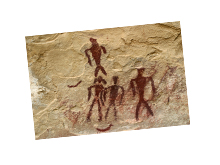

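// Create the loader (flash.display.Loader) before loading the bitmap into it.
var Loader0:Loader = new Loader();
Loader0.load(new URLRequest("library/assets/cave_art.jpg"));
myTouchSprite.addChild(Loader0);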

The TouchSprite class directly extends the Sprite class. This means that a TouchSprite object inherits all of the public properties available to a Sprite object, such as the x, y, rotation, scaleX and scaleY display properties. This enables the touch object to be positioned, rotated and scaled, then placed on stage. For example:

myTouchSprite.y = 150;
myTouchSprite.rotation = 45;
myTouchSprite.scaleX = 0.5;
myTouchSprite.scaleY = 0.5;
addChild(myTouchSprite);

The first step in adding touch interaction to touch objects is the local activation of all required gestures. To do this we add the gesture (as identified by the “gesture_id” in the GML root document) to the gestureList property associated with the touch object. For example (using the in-line method for adding items to an object list):
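As a hedged sketch, the in-line form is assumed here to be an object literal keyed by the gesture_id, with a value of true to activate the gesture. The exact shape expected by your version of the SDK may differ.

```actionscript
// Activate the GML-defined "n-drag" gesture on this touch object.
// The key must match a gesture_id in the root GML document.
myTouchSprite.gestureList = {"n-drag": true};
```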

This adds the gesture “n-drag” to the touch object, effectively activating gesture analysis on “myTouchSprite”. The analysis inspects the touch object for a matching gesture “action” and calculates point motion. The result is then processed and prepared for dispatch in the form of a gestureEvent.

This enables gestureEvents to be continually* dispatched when a gesture is recognized on the touch object (as defined in the GML). At this stage, if a listener and handler are not added to the touch object, nothing is done with the gestureEvent. To actively monitor gestureEvents on the touch object we will need to add an event listener.
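A sketch of the listener registration, assuming the gesture event class is `GWGestureEvent` in the `com.gestureworks.events` package and that it exposes a `DRAG` constant. Both names are assumptions to verify against the SDK.

```actionscript
// Assumed package path and class name for the gesture event type.
import com.gestureworks.events.GWGestureEvent;

// Call gestureDragHandler each time a DRAG gestureEvent is dispatched.
myTouchSprite.addEventListener(GWGestureEvent.DRAG, gestureDragHandler);
```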

This attaches a “DRAG” gestureEvent listener to the touch object and calls the function “gestureDragHandler” when an event is dispatched. The “gestureDragHandler” function is used to define what happens when a “DRAG” gestureEvent is detected on the touch object. For example:

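// Signature reconstructed from the surrounding text; the GWGestureEvent type is an assumption.
function gestureDragHandler(event:GWGestureEvent):void
{
    event.target.x += event.value.dx;
    event.target.y += event.value.dy;
}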

When this function is called, the event values dx and dy are added to the current x and y properties of the event target (myTouchSprite). The effect in this case is to move the touch object along the x and y axes. When touch points are placed on the touch object and moved across the screen, the touch object is “dragged” in the same direction and by the same distance as the average motion of the touch point cluster.
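To make "average motion of the touch point cluster" concrete, here is an illustrative sketch of how such an average could be computed. The helper `averageDelta` is hypothetical and is not part of Open Exhibits; the framework's own gesture analysis may work differently.

```actionscript
import flash.geom.Point;

// Hypothetical helper: average displacement of a cluster of touch points
// between two frames. "previous" and "current" are parallel Arrays of Point.
function averageDelta(previous:Array, current:Array):Point
{
    var delta:Point = new Point(0, 0);
    var n:int = Math.min(previous.length, current.length);
    if (n == 0) return delta; // no touch points, so no motion

    for (var i:int = 0; i < n; i++)
    {
        delta.x += current[i].x - previous[i].x;
        delta.y += current[i].y - previous[i].y;
    }
    delta.x /= n;
    delta.y /= n;
    return delta;
}
```

For example, if one touch point moves by (10, 0) and a second moves by (20, 10), this helper returns (15, 5), which is the kind of dx and dy pair a drag handler would add to the object's position.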

Using ActionScript to construct touch objects and add gestures allows full control of the touch interactions defined in the root GML document. Because the gesture descriptions live in the external GML file, they can be refined further to tune the UI/UX without recompiling the application.

*GestureEvents are continually dispatched as long as the match conditions continue to be met. If no touch points are detected on a touch object, no gesture events are fired. This ensures that dormant touch objects do not require unnecessary processing.

Note: In addition to the ActionScript methods used in this tutorial, there are new “Native” (CML) methods which allow the construction of new touch objects using a secondary external XML document in addition to the GML document.